Digital Extended Reality

In short

Digital Extended Reality technologies combine advanced computing systems (hardware and software) that can change how people connect with each other and their surroundings and influence or manipulate human actions through interactions with virtual environments.

Key ethical concerns surround cybersecurity and how these technologies may affect human behaviour and social dynamics. For example, technology that mimics human responses may lead people to respond to it as though it were actually human, while developments in Extended Reality may lead to undue influence from ‘nudging’ techniques.

More about Digital Extended Reality

Digital Extended Reality could change how people connect with each other and their surroundings in physical and virtual settings.

We include two main technologies in this family: Extended Reality (XR), which relates to virtual and simulated experiences using digital technologies, and Natural Language Processing (NLP), which allows computer systems to process and analyse a vast quantity of human natural language information (e.g., voice, text, images) and generate text in natural or artificial languages. These two technologies can stand alone or be combined in certain devices. You can explore specific examples that fall in these categories below.

Potential ethical repercussions of such technologies include cognitive and physiological impacts as well as behavioural and social dynamics, such as influencing users’ behaviours, and monitoring and supervising people.

XR: Virtual Reality

A virtual reality (VR) environment is completely simulated by digital means for its user. Currently, simulating VR focuses on visual aspects, but other senses are also being incorporated into these experiences.

XR: Augmented Reality

Augmented Reality (AR) combines elements of real and virtual environments instead of trying to achieve complete immersion in virtual reality. Users can see the real world, with virtual objects superimposed upon or combined with the real environment.

XR: Avatars and the metaverse

A metaverse emphasizes the social element of XR: multiple users can interact in one virtual or augmented environment. Avatars usually represent real people (or at least an animated version of them) and can be customised to some extent according to users’ preferences.

XR: Digital Twins

These are digital replicas of physical objects that can possess dynamic features like the synchronisation of data between the physical twin and the digital twin to monitor, simulate, and optimize the physical object.

NLP: Text generation and analysis

Learning procedures applied to large datasets of original text have allowed large language models (LLMs) to generate text at a level close to that of humans. In addition, techniques can analyse language content for its sentiments or opinions, helping to understand how the general public or a specific group feels about issues, events or topics.

NLP: Chatbots

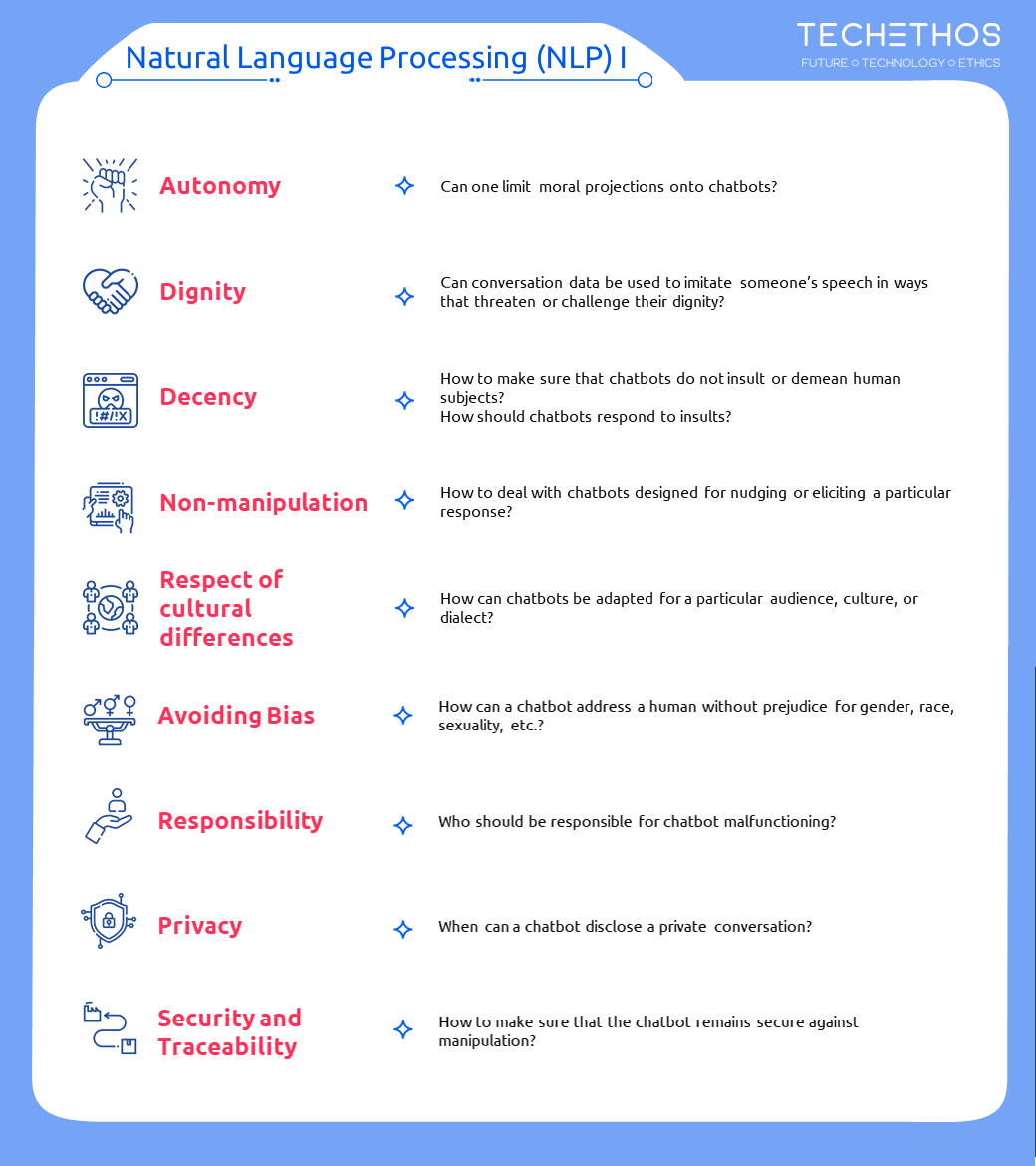

Conversational agents, or chatbots, use NLP to interact with users, orally or in writing. They already provide a wide array of services in customer support or with voice assistants.

NLP: Affective Computing

Through subtle psychological strategies in dialogue, such as prioritising certain topics or steering the conversation in a particular direction, a chatbot can influence what another person thinks or believes. Ultimately, this can lead the user to change their behaviour without being forced to, a technique known as nudging.

Ethical analysis

‘Ethics by design’ is at the core of TechEthos. It was necessary to identify the broad array of values and principles at stake in Digital Extended Reality, to be able to include them from the very beginning of the process of research and development. Based on our ethical analysis, we will propose how to enhance or adjust existing ethical codes, guidelines or frameworks.

XR and NLP are treated as self-sufficient, standalone technologies in the analysis below, but our in-depth reports also look at the ethical issues raised by their combination.

Is there a preference for material reality?

The emergence of virtual reality prompts the question of whether virtual experiences mediated via XR are equivalent to experiences gained in the real world: do they evoke similar emotions, behaviours or judgements?

Mode of being of virtual objects

Digital objects are the types of things we experience in the digital world, like “an image” or “a video”. However, it is not clear how they can be individual objects if all they consist of is digital data. The philosophy of digital objecthood features several positions. A moderate one is that digital objects exist insofar as they are experienced and conceptualised by a digital mind. A more radical position claims that virtual objects and environments are of the same nature as material objects and environments.

Value of virtual objects

If a distinction between virtual objects and material objects is kept, consequences of actions in material reality certainly do not equal the consequences of actions in virtual reality. For example, driving fast in virtual reality does not imply the same risk as driving fast on a material road.

Nevertheless, some scholars have argued that virtual objects do retain some ethical value, not because of the equivalent consequences involved, but because values or behaviour patterns formed in XR can influence behaviours in the real world, for example in speeding on a road in one’s actual car, with negative consequences.

More core ethical dilemmas are tackled in the ‘Analysis of Ethical Issues’ report.

Training: knowledge transfer and qualia

One of the most established applications of XR is in training skills. The areas in which XR training applications are the most impactful usually include high-risk or costly material training conditions, such as training for pilots and surgeons. Are skills acquired via virtual experiences equivalent or transferable to material conditions?

Remote work: long-term effects on workers and the job market

XR work environments are available on the market and allow coworkers to host meetings and interact at a distance, sometimes using avatars. The ethical challenges associated with their use include the potential overuse of this always-accessible mode of work, the impact on local job markets, and the collection of workers’ data, among others.

More applications and use cases are tackled in the ‘Analysis of Ethical Issues’ report.

NLP systems lack human reasoning

Today most chatbots are deterministic models without machine learning. They take the user down a decision tree in a predetermined way. However, the most advanced NLP techniques, capable of varied conversation on many topics with nearly human-level outputs, rely on statistical linguistic analysis. They do not involve any understanding of meaning or semantics. Void of intention and disconnected from action and responsibility, they cannot be considered on a par with language produced by human speakers. However, humans might take the chatbot’s language to be meaningful and react to its semantic content.
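As an illustration of the deterministic kind of chatbot described above, here is a minimal sketch in Python. It assumes a small, hypothetical customer-support tree; the `Node` class, the `run_dialogue` function and all prompts are invented for this example and do not come from any real product. The same sequence of replies always produces the same sequence of prompts, and nothing in the code models meaning.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One step in the dialogue: a prompt plus the replies it accepts."""
    prompt: str
    options: dict = field(default_factory=dict)  # normalised reply -> next Node


def run_dialogue(root, replies):
    """Walk the tree with a fixed list of user replies and return the prompts shown."""
    transcript = [root.prompt]
    node = root
    for reply in replies:
        key = reply.strip().lower()
        if key not in node.options:
            # No learning or interpretation: unknown input just repeats the question.
            transcript.append("Sorry, I did not understand. " + node.prompt)
            continue
        node = node.options[key]
        transcript.append(node.prompt)
        if not node.options:  # leaf reached, the conversation ends
            break
    return transcript


# Hypothetical support tree (illustrative content only).
refund = Node("Your refund request has been logged.")
status = Node("Your order is on its way.")
order = Node("Do you want 'status' or 'refund'?", {"status": status, "refund": refund})
hours = Node("We are open 9:00 to 17:00.")
root = Node("Is your question about 'order' or 'hours'?", {"order": order, "hours": hours})

if __name__ == "__main__":
    for line in run_dialogue(root, ["order", "refund"]):
        print(line)
```

A statistical language model would replace the fixed `options` lookup with generated text, which is what makes its output more varied and also harder to predict.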

Artificial emotions influence human users

Some applications use conversational agents to influence their users through the architecture or language of the dialogue. Manipulation by a conversational agent can be direct (including inaccurate or skewed information) or indirect, using the “nudging” strategies.

More core ethical dilemmas are tackled in the ‘Analysis of Ethical Issues’ report.

Human resources: gender bias, data protection and labour market

Chatbots are used by human resources managers for recruitment as well as for career follow-up and employee training. The training data used have been found to be biased, especially against marginalised populations. This can lead to different types of harm, in terms of how these populations are represented and what resources or opportunities, such as jobs, are allocated to them.

Creativity: authenticity

NLP can be used to generate seemingly creative or poetic text that has no human creative input or that relies on prior creative work. If such applications were used at scale, they might reduce the profitability of creative or innovative work.

More applications and use cases are tackled in the ‘Analysis of Ethical Issues’ report.

Legal analysis

While no international or EU law directly addresses or explicitly mentions Digital Extended Reality, many aspects are subject to international and EU law. Below, you can explore the legal frameworks and issues relevant to this technology family and read about the next steps in our legal analysis.

XR has the potential to impact human rights in many ways, both positive and negative. In relation to some rights in particular contexts, XR has the potential to enhance enjoyment of rights, such as when XR provides safer workplace training modules that help support the right to just and favourable conditions of work. Yet in other ways, the use of XR interferes with and may even violate human rights.

XR technologies collect and process a variety of different data to create an interactive and/or immersive experience for users. The gathering of such data, however, raises concerns relating to privacy and data protection. On this, it has been suggested that there are three factors in relation to XR technologies generally and VR/AR devices specifically which, in combination, present potentially serious privacy and data protection challenges. These factors are: (i) the range of different information gathering technologies utilised in XR, each presenting specific privacy risks; (ii) the extensive gathering of data which is sensitive in nature, as distinct from the majority of other consumer technologies; and (iii) the comprehensive gathering of such data being an essential aspect of the core functions of XR technologies.

Collectively, these factors highlight the ongoing tension between the necessity of collecting intimate data to enable the optimal immersive or interactive experience in XR, balanced against the requirement to uphold rights to privacy and data protection under international and EU law. While these legal frameworks do not specifically address or explicitly refer to XR technologies, many of the relevant provisions are directly applicable.

Consumer rights and consumer protection law are designed to hold sellers of goods and services accountable when they seek to profit, for example by taking advantage of a consumer’s lack of information or bargaining power. Some conduct addressed by consumer rights laws is simply unfair, while other conduct might be fraudulent, deceptive, and/or misleading.

Consumer rights are particularly important in the XR context, as the AR/VR market share is expected to increase by USD 162.71 billion from 2020 to 2025, and the market’s growth momentum to accelerate at a CAGR of 46% (with growth being driven by increasing demand).

The use of XR is already transforming diverse industries (healthcare, manufacturing) and at the same time changing culture, travel, retail/ecommerce, education, training, gaming and entertainment (the latter two being the most significant).

As many XR applications integrate AI systems, any laws governing AI would apply to those XR applications. While there are no international laws governing AI specifically, the EU has proposed a regulatory framework dedicated to AI governance.

This framework, which includes a proposed AI Act, does not mention XR, but would apply (if adopted as written) to any XR technology using AI. It should be also noted that not all XR technologies utilise AI technologies and would, therefore, not be subject to any proposed AI regulation. For example, chatbots can be developed using AI-based NLP approaches or using an extensive word database (not AI-based).

Since many XR applications provide services in the online environment, any laws governing the provision of digital services would apply to those XR systems. While there are no international laws governing digital services specifically, the EU has proposed a regulatory framework dedicated to the governance of digital services. This framework, which includes a proposed Digital Services Act, does not mention XR explicitly but would apply (if adopted as written) to providers of XR offering services in the digital environment.

In addition to analyzing the obligations of States under international law and/or the European Union, the project conducted a comparative analysis of the national legislation of three countries: Italy, France, and the United Kingdom.

While laws explicitly governing the use of XR are limited, France and Italy are particularly influenced by EU law in relation to XR and ongoing legal developments, including the proposed AI Act, Digital Services Act (DSA) and Digital Markets Act (DMA). Despite leaving the EU, the UK has retained various EU laws. In the long term, however, UK law relevant for XR technologies might diverge from EU law.

We complemented this analysis with a further exploration of overarching and technology-specific regulatory challenges. We also presented options for enhancing legal frameworks for the governance of XR at the international and national level.

Societal analysis

This type of analysis is helping us bring on board the concerns of different groups of actors and look at technologies from different perspectives.

TechEthos asked researchers, innovators, as well as technology, ethical, legal and economic experts, to consider several future scenarios for our selected technologies and provide their feedback regarding attitudes, proposals and solutions.

From June 2022 until January 2023, the six TechEthos science engagement organisations conducted a total of 15 science cafés involving 449 participants. These science cafés were conducted in: Vienna (Austria), Liberec (Czech Republic), Bucharest (Romania), Belgrade (Serbia), Granada (Spain), and Stockholm (Sweden).

Science cafés had a two-fold objective: to build knowledge (e.g., of ethics and technological applications) about the selected families of technologies (climate engineering, neurotechnologies and digital extended reality) and to recruit participants for multi-stakeholder events.

Seven out of 15 science cafés were dedicated to the Extended Reality technology family.

An important perspective the TechEthos project wanted to highlight alongside expert opinions was the citizen perspective. To encourage participation and facilitate conversation, an interactive game (TechEthos game: Ages of Technology Impact) was developed to discuss the ethical issues related to digital extended reality. The goal of this exercise was to understand citizens’ awareness and attitudes towards these emerging technologies to provide insight into what the general public finds important.

The six TechEthos science engagement organisations conducted a total of 20 scenario game workshops engaging a wide audience from varied backgrounds.

Citizen value categories were extracted from the workshop comments through qualitative coding, allowing for comparisons across all workshops. Each technology family exhibits distinct prominent values.

NLP and XR highlight authentic human connection, experience, and responsible use, considering their aim to simulate human interaction. Safety and reliability are important across all three families. Ecosystem health is a shared concern across all families. In particular, NLP might pose ecological challenges as it might lead to an exponential increase in the required server capacity, causing additional CO2 emissions and increasing the depletion of rare earths, fossil fuels and other limited resources.

Media discourse on technologies both reflects and shapes public perceptions. As such, it is a powerful indicator of societal awareness and acceptance of these technologies. TechEthos carried out an analysis of the news stories published in 2020 and 2021 on our three technology families in 13 EU and non-EU countries (Austria, Czech Republic, France, Germany, Ireland, Italy, Netherlands, Romania, Serbia, Spain, Sweden, UK, and USA). This used state-of-the-art computational tools to collect, clean and analyse the data.

A noteworthy finding related to digital extended reality is that this family of technologies is primarily discussed with reference to virtual reality. Indeed, the term is mentioned in almost 42% of the stories collected for this family of technologies. By contrast, natural language processing (NLP) is rarely mentioned. This suggests that the general public might have more awareness of virtual reality than of NLP techniques. This finding is also of interest to TechEthos public engagement activities, stressing the need for more effort to raise public awareness of NLP. Keywords related to Ethical, Legal, and Social Issues (ELSI) were mentioned in 35% of the overall news stories collected for digital extended reality, with the terms ‘society’, ‘security’ and ‘privacy’ being the most frequently mentioned ELSI topics.
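To show how a figure such as “mentioned in 35% of the stories” can be computed, here is a minimal sketch in Python. It is not the TechEthos pipeline, whose tools are not described here: the `share_mentioning` function, the simple case-insensitive substring matching and the four sample stories are all assumptions made for illustration.

```python
def share_mentioning(stories, keywords):
    """Return the fraction of stories containing at least one keyword.

    Matching is a case-insensitive substring test, an assumed simplification.
    """
    if not stories:
        return 0.0
    lowered = [k.lower() for k in keywords]
    hits = sum(1 for story in stories if any(k in story.lower() for k in lowered))
    return hits / len(stories)


# Invented sample stories, not data from the study.
sample_stories = [
    "New virtual reality headset raises privacy questions.",
    "Chatbot helps customers track parcels.",
    "How augmented reality could change society.",
    "Studio releases a virtual reality game.",
]

elsi_share = share_mentioning(sample_stories, ["society", "security", "privacy"])
vr_share = share_mentioning(sample_stories, ["virtual reality"])

if __name__ == "__main__":
    print(f"ELSI keywords: {elsi_share:.0%} of stories")
    print(f"'virtual reality': {vr_share:.0%} of stories")
```

Substring matching is crude (it would also count ‘security’ inside ‘cybersecurity’), so a real analysis would likely need tokenisation and language-specific keyword lists for each country.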

Our Recommendations

Explore the project recommendations to enhance the EU legal framework and the ethical governance of this technology family.

This policy brief explores the ethical challenges of XR and NLP within the expansive realm of General Purpose AI.

This brief delves into human-machine dynamics, ethical data usage, and the urgent need for operational norms and standards in the AI domain.

We addressed the ethical challenges of XR and NLP. These topics belong to the larger area of General Purpose Artificial Intelligence.

This policy brief lists new and emerging issues to supplement, enhance and update the Assessment List for Trustworthy Artificial Intelligence (ALTAI) developed by the High-Level Expert Group on AI. Based on our analysis, we formulate specific recommendations for AI regulation.