ALLEA publishes 2023 revised edition of The European Code of Conduct for Research Integrity

Authored by: Mathijs Vleugel (ALLEA)

Reviewed by: Greta Alliaj (Ecsite)

On 23 June 2023, ALLEA released the 2023 revised edition of “The European Code of Conduct for Research Integrity”, which takes account of the latest social, political, and technological developments, as well as trends emerging in the research landscape. These revisions took place in the context of the EU-funded TechEthos project, with the additional aim of identifying gaps and necessary additions related to the integration of ethics in research protocols and the possible implications of new technologies and their applications.

Together, these changes help ensure that the European Code of Conduct remains fit for purpose and relevant to all disciplines, emerging areas of research, and new research practices. As such, the European Code of Conduct can continue to provide a framework for research integrity to support researchers, the institutions in which they work, the agencies that fund them, and the journals that publish their work.

The Chair of the dedicated Code of Conduct Drafting Group, Prof. Krista Varantola, launched the new edition under the auspices of ALLEA’s 2023 General Assembly in London, presenting the revised European Code of Conduct to delegates of ALLEA Member Academies in parallel with its online release to the wider research community.

The 2023 revised edition

The revisions in the 2023 edition of the European Code of Conduct echo an increased awareness of the importance of research culture in enabling research integrity and implementing good research practices, and they place a greater responsibility on all stakeholders for observing and promoting these practices and the principles that underpin them. They likewise accommodate heightened sensibilities in the research community to mechanisms of discrimination and exclusion and the responsibility of all actors to promote equity, diversity, and inclusion.

The revised European Code of Conduct also takes account of changes in data management practices and the General Data Protection Regulation (GDPR), as well as recent developments in Open Science and research assessment. Meanwhile, Artificial Intelligence tools and social media are radically changing how research results are produced and communicated, and the revised European Code of Conduct reflects the challenges these technologies pose to upholding the highest standards of research integrity.

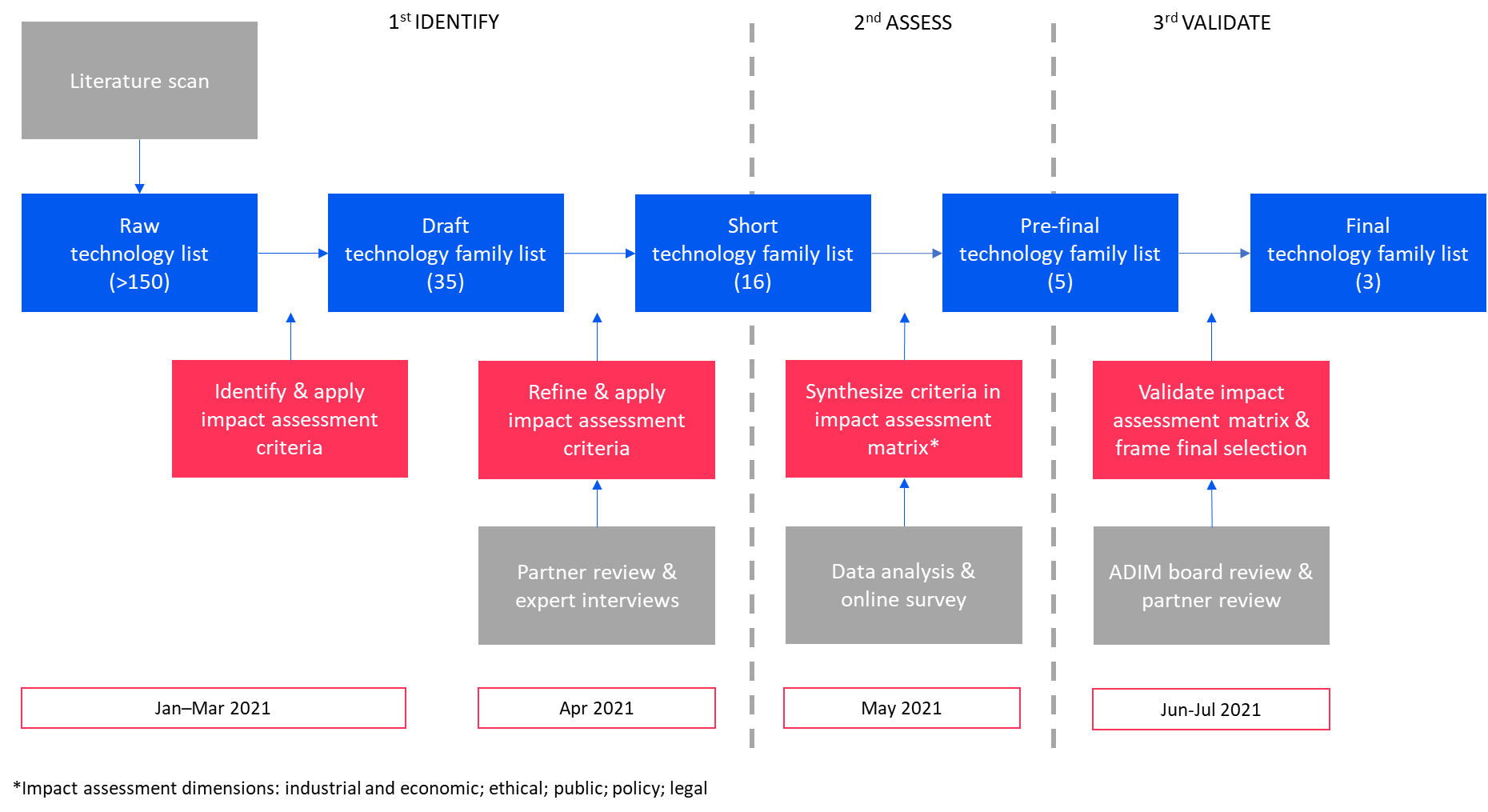

The revisions process

From early 2022, the Drafting Group, consisting of members of the ALLEA Permanent Working Group on Science and Ethics, set about exploring what changes would be needed to update the 2017 edition of the European Code of Conduct so that it reflects current views on what are considered good research practices. In October 2022, their work culminated in a draft revised document, which was sent for consultation to leading stakeholder organisations and projects across Europe, including representative associations and organisations for academia, publishers, industry, policymaking, and broader societal engagement.

The response to this stakeholder consultation was exceptional, indicating a sense of ownership and engagement with the European Code of Conduct amongst the research community. As part of this stakeholder consultation process, the views of the TechEthos consortium partners were collected both in writing and during an online workshop.

The Drafting Group captured all feedback and discussed it in detail in February 2023. A summary of the stakeholder feedback process and how it informed the 2023 revision can be found at: https://allea.org/code-of-conduct/.
